Designing an AI Copilot for Customer Support

Overview

Support Copilot emerged from a broader shift toward AI-first support. As the organization accelerated toward automation and augmentation, traditional workflows and static tooling began to show their limits.

The challenge was not simply adding AI. Support work is high-stakes, and mistakes are costly. We needed to design a system advocates could trust. The goal was to augment judgment, reduce cognitive load, and increase speed without sacrificing accuracy, empathy, or control.

Reframing the Problem

Early direction from product centered on a chatbot-style interface where advocates could “chat with the copilot” to ask questions and trigger actions.

It felt intuitive and seemed like a plausible first step, but I was concerned it would struggle in practice.

Speed is the primary performance metric for advocates. Chat is often slower than existing shortcuts and structured workflows. Many advocates are also based in South America and the Philippines, where English is not always a first language. A purely free-form interface could introduce hesitation at critical moments.

So I reframed the question. Instead of asking how advocates could talk to AI, I focused on how AI could support advocates inside their existing workflow. This expanded the design from reactive chat to proactive assistance.

Solution

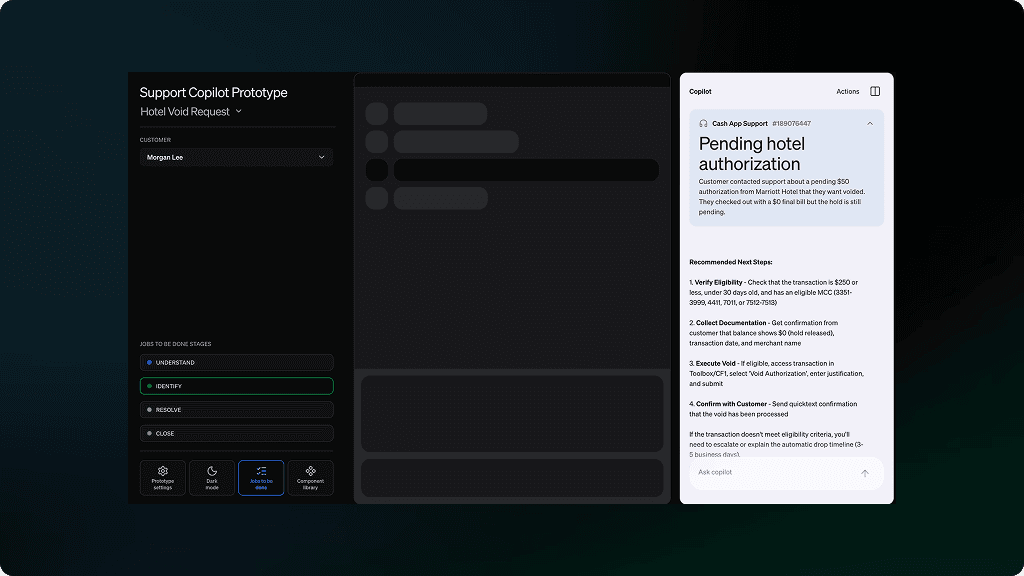

We shipped a focused core release to deliver value quickly while giving us space to evolve the strategy.

Q&A AI Chat

A real-time AI partner inside the case. Advocates ask natural language questions about customers, transactions, or policy and receive grounded answers instantly. This reduces tab switching and search time while supporting complex reasoning.

Case Summary

A structured snapshot of the issue, key events, actions taken, and current state. Advocates orient themselves in seconds, improving handoffs and resolution speed.

Actions Menu

A centralized home for workflows and system actions. It removes interface hunting and creates a stable utility layer that scales as Copilot grows.

Suggestion Pills

Context-aware prompts that eliminate the blank-cursor moment and encourage faster engagement without forcing a rigid path.

Knowledge Search

Relevant articles and workflows surfaced directly inside Copilot, reducing friction and improving policy adherence.

Together, these features established Copilot as a trusted assistant within the workflow and laid the foundation for broader change.

From Reactive Support to Proactive Guidance

The core release made Copilot a strong reactive assistant. But reactive AI depends on the advocate knowing what to ask. The next phase shifts toward proactive guidance.

Reactive vs Proactive

In reactive mode, the advocate leads. They initiate, refine, and explore. In proactive mode, AI surfaces guidance when confidence is high.

Balancing these modes is critical. Too passive, and AI adds little value. Too aggressive, and it feels intrusive. Designing this balance has become central to the experience.

Proactive Workflow Assistance

Support cases follow recognizable patterns. Instead of requiring advocates to assemble the right steps manually, Copilot can detect workflows in motion and surface relevant guidance inline.

This reduces cognitive overhead and brings the right information forward at the right moment. The goal is not to replace workflows, but to make them adaptive.

Know / Say / Do

To structure proactive assistance, I framed AI support around three needs:

Know - Surface relevant policies, risk signals, and contextual insights before the advocate goes hunting.

Say - Provide suggested responses grounded in policy and case context, reducing drafting time while maintaining empathy and compliance.

Do - Recommend actions when AI confidence is high, helping advocates move decisively toward resolution.

This framework clarified where AI should inform, assist, or step back. It also gave cross-functional partners a shared language for alignment.

Impact & Reflection

This work marked a shift in how support is designed at Block. Instead of layering AI onto existing workflows, we began rethinking the interaction model itself. The team moved from viewing AI as a feature to treating it as a system-level collaborator inside the advocate experience.

Designing in this space reinforced how quickly the landscape is moving. With AI, both the product and the product development process accelerate. Prototypes become working software in days. Feedback loops compress. Roadmaps evolve mid-flight. In that environment, clear principles matter more than fixed plans.

It also reaffirmed something more durable. Even as capabilities expand, solutions still need to be grounded in real user needs. Speed alone does not earn trust. In high-stakes environments, adoption depends on confidence, clarity, and alignment with how people actually work.

For me, this project marked a personal evolution. I moved beyond designing individual features and into shaping how humans and AI collaborate within complex systems.